So much information. Such little trust.

It’s hard to overstate the impact of the internet on pretty much everything over the last couple of decades. The costs of creating and distributing information have effectively dropped to zero. The internet has democratized the consumption and publication of information of every sort. But a weird thing has happened: we’ve had this increase in information, and yet it feels like we have less trust in the institutions where much of our information comes from. We finally have access to basically all information and all knowledge, and we have a means of aggregating the smartest thinking in the world... but could it be the case that there’s actually less trust, and less consensus about what we believe to be true?

This lack of trust seems self-evident in the day-to-day news. We still argue about whether or not to get the COVID vaccine, whether masks work, whether to open schools. Misinformation proliferates on social networks. Even media companies that have been around a long time are not immune to poor reporting and misinformation. Meanwhile, academia is suffering from a reproducibility problem, with one study reporting that only ~40% of selected studies within the field of psychology are reproducible. Indeed, if we look at recent polls, we can see that trust in traditional institutions like government and media is very low.

Figuring out how we can increase trust within our information networks is important. What good is information if we can’t trust it? What’s more, trust at the individual level, and consensus at the aggregate level, carry a lot of power. The internet allows networks to form and dissipate quickly around a cause or mission. Internet mobs can form rapidly and cancel a celebrity, change the agenda of a private company, or incite a revolution like the Arab Spring. Organizations, whether public or private, are more responsive to public opinion now than ever. Collective action requires people and organizations to understand reality well. And a collective understanding of reality requires trust and consensus. Yet despite an abundance of information, there seems to be a serious lack of trust and consensus.

Of course, part of the reason for this lack is the abundance of information itself, specifically the number of different sources of information and sources of truth on the internet. This is a really great thing in some ways. It means that voices previously unheard are given the time of day. It means that we have more datasets that we can use to analyze the same problem. On balance, the increased variance in information is a net positive for the internet.

On the other hand, there are a number of factors that decrease trust and consensus too. Among them, information overload, misinformation, and ad-funded media each play a role. Information overload makes it difficult to digest information from a wide variety of perspectives, identify the highest quality information, and do the required analysis to triangulate what’s true. At least some misinformation can be chalked up to an ad-funded business model that incentivizes clickbait, which is not necessarily media that is worth trusting. All of these are interesting reasons for why we have a lack of trust and consensus, and each requires its own attention and due diligence. However, the one I’d like to highlight, and one of the most interesting, is the confusion between political truth and technical truth.

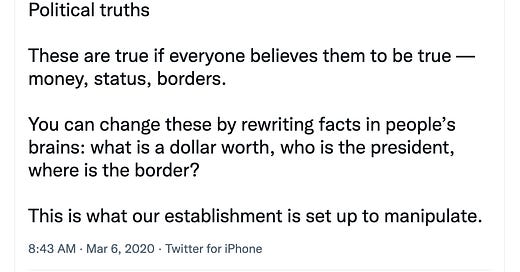

Balaji Srinivasan has a great tweet thread that distinguishes the two ideas.

So political truths are opinions, theories, or intersubjective realities that we hold to be true in order to cooperate together as a society. Pretty much all of our laws are based on political truths that have been agreed upon at some point in time.

On the other hand, technical truths are descriptions of reality. Physics, chemistry, biology, math: these are full of technical truths that largely don’t change over time.

What is interesting is that these don’t seem to be binary categories; they exist on a sliding scale. Physics has political truths in the form of theories that have yet to be proven. At the same time, money, which Balaji gives as an example of a political truth, has an exchange rate at any given point in time. That rate is a technical truth that can be observed within a system, albeit a manmade one.

There is a lot more that can be said about the distinction between political truths and technical truths, and about where one ends and the other begins. A lot of our life is based on bets, theories, and predictions about the future drawn from an incomplete dataset. Finding consensus as a collective to make important decisions depends on political truths and on the technical truths that underlie them.

But the point here is that a lot of the lack of trust and consensus in our institutions comes from our conflation of these two. People think that their political truths are actually technical truths, or they feel more strongly about their political truths than they should. As Balaji said, political truths are important, and they’re important because we live in a world of incomplete information that requires us to form beliefs, spread those to others, and triangulate in order to make the best decisions possible.

The more we embed technical truths in our information and media, the more likely our political truths will be able to create trust and consensus. When it comes to issues like the COVID vaccine, misinformation, or the reproducibility crisis that is plaguing academia and science, our lack of trust and consensus about what is true stems from a lack of technical truths about the world that we’re able to verify. That doesn’t mean that we need to get rid of political truths. But the more we’re able to agree about technical truths, the more we’ll be able to agree about political truths. It’s how we can use the abundance of information we have in order to create trust, consensus, and ultimately progress.